About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

26th January 2026 12:20 PM

Phonetics Lab Meeting

We'll discuss Sections I-III of the paper "The myth of categorical perception" by Dr. Bob McMurray, J. Acoust. Soc. Am. 152, 3819–3842 (2022), https://doi.org/10.1121/10.0016614. Dr. McMurray is the F. Wendell Miller Professor of Psychological and Brain Sciences at the University of Iowa.

Pay special attention to the distinction between "categorical perception" and "categorization".

Abstract:

Categorical perception (CP) is likely the single finding from speech perception with the biggest impact on cognitive science. However, within speech perception, it is widely known to be an artifact of task demands. CP is empirically defined as a relationship between phoneme identification and discrimination.

As discrimination tasks do not appear to require categorization, this was thought to support the claim that listeners perceive speech solely in terms of linguistic categories.

However, 50 years of work using discrimination tasks, priming, the visual world paradigm, and event related potentials has rejected the strongest forms of CP and provided little strong evidence for any form of it.

This paper reviews the origins and impact of this scientific meme and the work challenging it. It discusses work showing that the encoding of auditory input is largely continuous, not categorical, and describes the modern theoretical synthesis in which listeners preserve fine-grained detail to enable more flexible processing. This synthesis is fundamentally inconsistent with CP.

This leads to a different understanding of how to use and interpret the most basic paradigms in speech perception—phoneme identification along a continuum—and has implications for understanding language and hearing disorders, development, and multilingualism.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

28th January 2026 12:20 PM

Phonetics Lab Meeting

We'll continue our discussion of the McMurray paper.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

6th February 2026 03:00 PM

Cornell Spring Cognitive Science Colloquium: Mouse Songs & Squeaks: A Window into Brain Circuits for Vocal Communication

This is not a P-Lab lecture, but the topic is of interest to ongoing Linguistics research.

The Cornell Cognitive Science Colloquium presents Cornell's Dr. Katherine Tschida, Assistant Professor of Psychology. She will give a talk titled:

Mouse songs and squeaks: a window into brain circuits for vocal communication.

Abstract:

To communicate successfully, both humans and non-human animals must vocalize in the right way and at the right time according to behavioral context.

Mice offer a unique opportunity to study the brain circuits that regulate the context-dependent control of vocal communication, because they offer an unparalleled toolkit of viral-genetic strategies to study defined populations of neurons and because they produce a rich repertoire of vocalization types.

I will present published and ongoing work aimed at characterizing the brain circuits that regulate mouse vocal communication across different behavioral contexts and across development.

Location: G90, Uris Hall, Cornell University

6th February 2026 04:30 PM

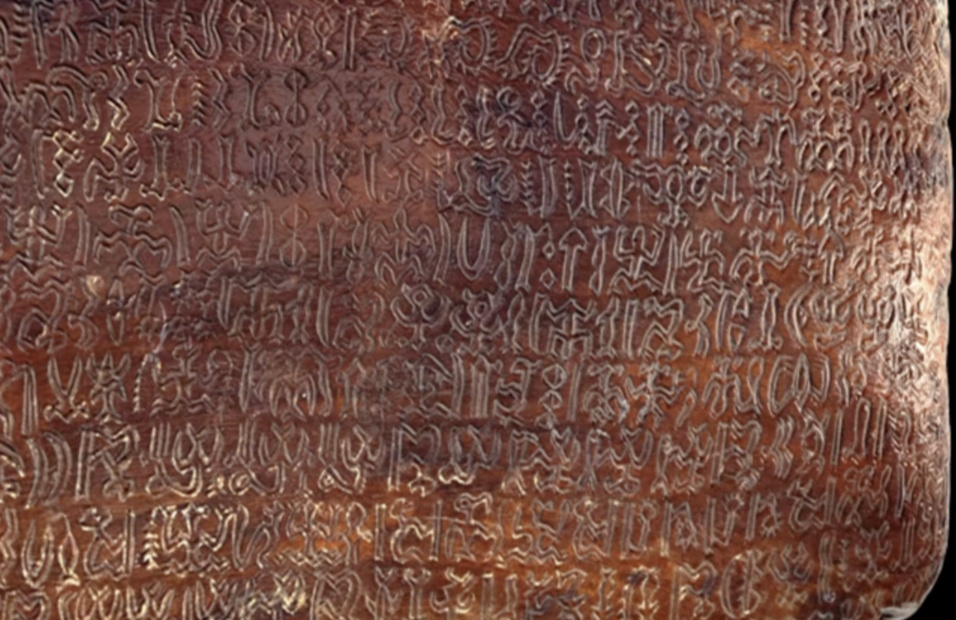

Classics Speaker Series presents Silvia Ferrara: Where does writing come from? Inventing and deciphering ancient scripts

This is a talk from the Classics department, which may be of interest to linguists.

The Cornell Classics Speaker Series presents Dr. Silvia Ferrara, Professor of Classical Philology and Italian Studies, University of Bologna, Italy, who will give a talk titled:

Where does writing come from? Inventing and deciphering ancient scripts.

Abstract:

The invention of writing is an opaque phenomenon, as are all origins, as are all things remote and detached from the present, embedded in the deepest recesses of time.

To understand how it was born we must go back more than 5000 years, and travel thousands of kilometers between China, Mesopotamia, Egypt and the Mayan civilizations, and end our journey on Easter Island, where a writing system seems to have been invented from zero.

But not even that is enough; we have to go even further back in time, to understand how the first signs were created, and how these tell us about our imagination, our visual perception and the relationship with what surrounds us.

Writing is not only the greatest invention in the world, but also a kaleidoscope to understand it and to better understand ourselves. However, there are still ancient scripts that we cannot read and decipher, and we will discover how state-of-the-art research can solve these problems and, by doing so, reconstruct the history of the written word, globally.

Location: G22, Goldwin Smith Hall, Cornell University, Ithaca, NY 14853

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced is particularly valuable for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080 Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak.

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with roughly 7,400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager to schedule batch jobs, which can run on any available server or GPU in the cluster.
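As a rough illustration of the SLURM workflow, the batch script below requests one GPU and a few CPU cores, then reports where the job ran. It is a sketch under assumptions, not G2's documented setup: the job name, resource amounts, time limit, and the commented-out partition line are placeholders, and the G2 administrators are the authority on partitions and limits. Whether SLURM exports `CUDA_VISIBLE_DEVICES` for the job depends on how the cluster's GPU resources (GRES) are configured.

```shell
#!/bin/bash
#SBATCH --job-name=gpu-example        # name shown in squeue
#SBATCH --output=gpu-example_%j.out   # %j expands to the job ID
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=4             # placeholder amount
#SBATCH --mem=16G                     # placeholder amount
#SBATCH --gres=gpu:1                  # request one GPU on whichever node runs the job
#SBATCH --time=02:00:00               # wall-clock limit (HH:MM:SS)
##SBATCH --partition=PARTITION_NAME   # hypothetical; the doubled ## keeps SLURM from reading it

# Report where the job landed. Outside of SLURM these variables are unset,
# so the script falls back to placeholder text and can be dry-run locally.
JOB_ID="${SLURM_JOB_ID:-not-under-slurm}"
GPUS="${CUDA_VISIBLE_DEVICES:-none}"
echo "Job ${JOB_ID} running on ${HOSTNAME:-unknown-host}"
echo "GPUs visible to this job: ${GPUS}"

# Replace this line with the real workload, e.g. a hypothetical
#   python train.py --epochs 10
echo "workload would start here"
```

Saved as, say, `gpu-example.sh`, the script would be submitted with `sbatch gpu-example.sh` and monitored with `squeue -u $USER`; its output goes to `gpu-example_<jobid>.out` in the submission directory. Keeping all `#SBATCH` lines at the top matters, because sbatch stops reading directives at the first executable command.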

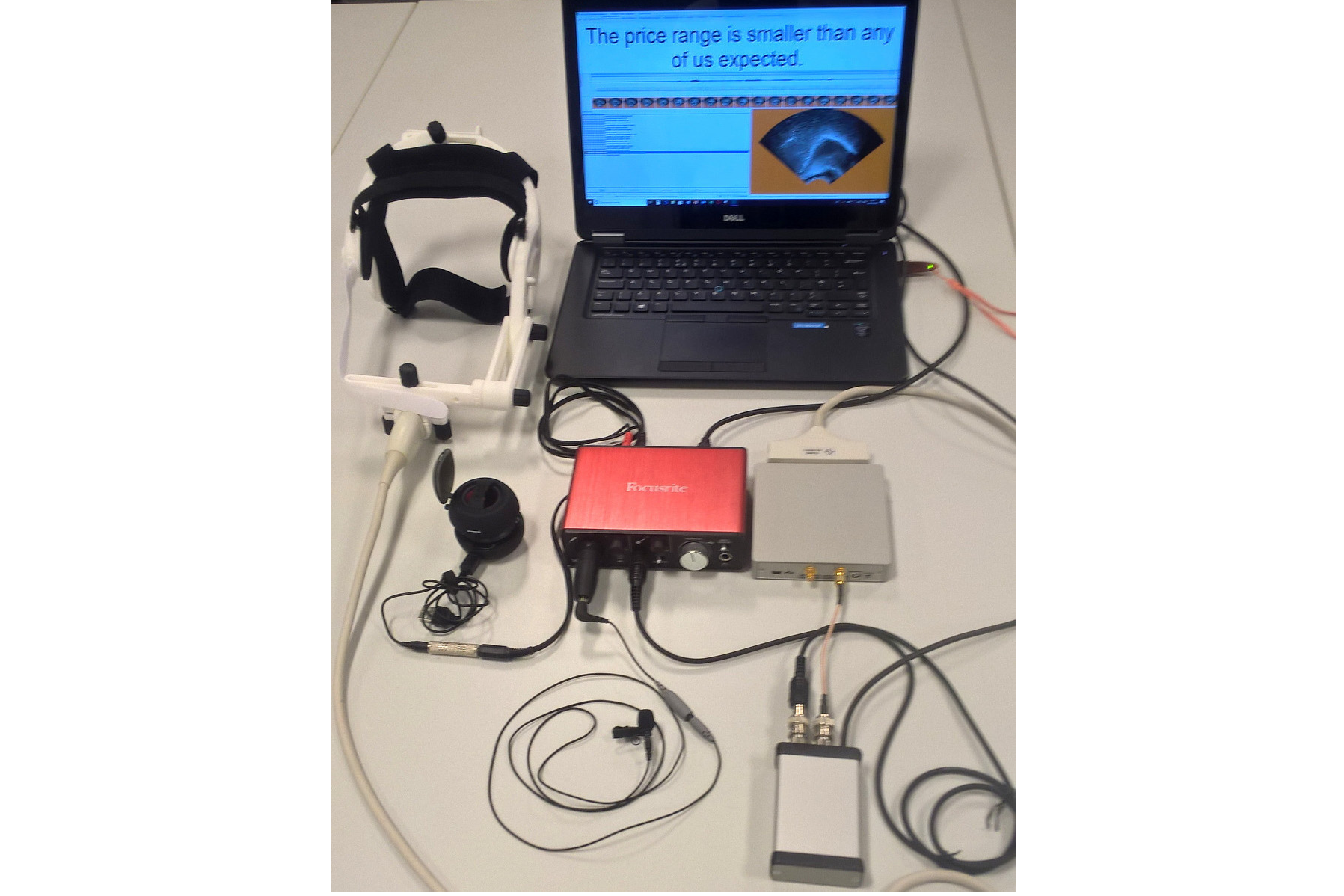

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

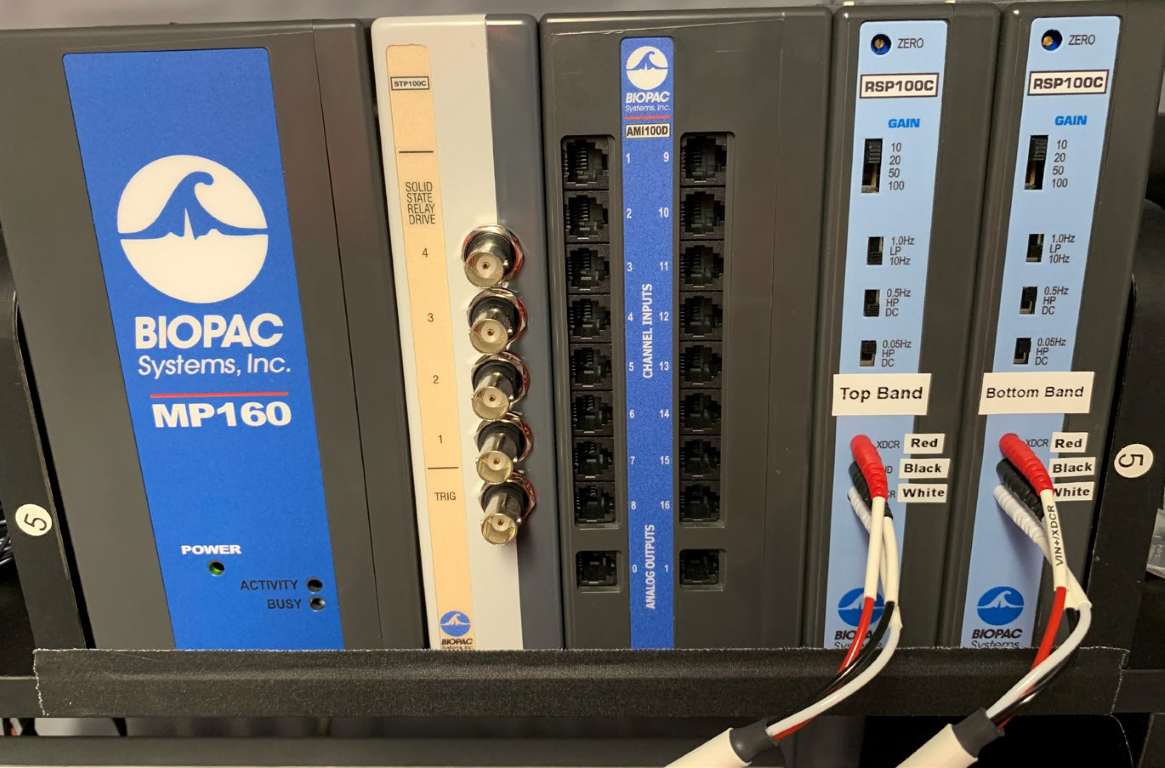

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- A Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- A Confluence wiki page, available only to Cornell faculty and students, outlines the procedures for accessing corpora for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.