About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

10th February 2023 12:25 PM

Samuel Andersson will give an informal talk on "Iterativity in Phonological Processes"

Abstract:

Some phonological processes have the opportunity to apply more than once within a phonological domain, as in some varieties of Spanish: /islas/ [ihlah] 'islands' with two applications of debuccalization of /s/ to [h]. It is a longstanding issue in phonology whether such processes apply simultaneously (all applications at once) or iteratively (one application at a time).

I discuss preliminary typological data addressing this question, arguing that optionality and within-speaker variation may prove crucial in furthering our understanding of phonological iterativity.

Location: 111 Morrill Hall

10th February 2023 04:30 PM

Samuel Andersson will have an informal Phonology discussion with our grad students

Samuel Andersson will discuss phonology with core grad students prior to dinner with these students.

Location: 208 Morrill Hall (Linguistics Lounge)

15th February 2023 12:10 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

Simon will discuss an experiment in preparation.

And in light of the recent B11 water intrusion, we will discuss emergency procedures.

Location: B11, Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

16th February 2023 04:30 PM

Linguistics Colloquium Speaker: Jennifer Kuo will discuss "When Phonological Learning is Not Statistical: How Learning Biases have Reshaped Malagasy Paradigms"

The Department of Linguistics proudly presents Jennifer Kuo (she/her), PhD student in the Department of Linguistics, UCLA. The title of Jennifer's talk is:

"When Phonological Learning is Not Statistical: How Learning Biases have Reshaped Malagasy Paradigms".

ASL interpretation for the talk will be provided. Her talk will be followed by a reception in the Linguistics Lounge (Room 208 Morrill Hall).

Abstract:

One view of phonological learning is that it is driven by a domain-general bias towards frequency-matching, and therefore predictable from statistical distributions of the language (Albright 2002; Ernestus & Baayen 2003; Nosofsky 2011). A competing view is that learning is influenced by various linguistically-motivated biases, such as a bias towards less complex patterns.

One way to tease apart these effects is to look at how learners behave when presented with conflicting data patterns. Learners do not always replicate the language they hear from their parents, and their errors (such as goed instead of went) can be informative, telling us what biases are present in learning. Such errors can be adopted into speech communities and passed through generations of speakers, resulting in reanalysis of a data pattern.

In this talk, I look at reanalyses over time in Malagasy and show that reanalysis is not entirely predictable from statistical distributions.

Instead, I argue that Malagasy reanalysis is sensitive to both frequency-matching and a markedness bias, using an iterated version of Maximum Entropy Harmonic Grammar (Smolensky 1986; Goldwater & Johnson 2003) that simulates the cumulative effect of reanalyses across generations of speakers.

I further propose that the markedness effects active in reanalysis are restricted to those already present in the language as a phonotactic tendency, and show that this is the case for Malagasy.

These results demonstrate how findings from language change over time, combined with quantitative modeling, can give us insight into phonological learning.

Bio:

Jennifer is primarily interested in the structure of paradigms, and how different learning biases affect the learning of paradigms. She addresses this question by looking at paradigm reanalysis over time, and how markedness effects and frequency-matching interact to influence the direction of reanalysis. In more recent work, she is supplementing these findings with experimental evidence from Artificial Grammar Learning experiments.

Jennifer’s CV is here: https://www.kuojennifer.com/cv/.

Location: Room 106 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without having to write any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments.

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth.

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs.

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs.

- Oelek - a Linux NFS storage server that supports Badjak.

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
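As a rough illustration of what a batch submission involves, the sketch below builds the text of a SLURM batch script in Python. The `#SBATCH` options shown (`--job-name`, `--output`, `--cpus-per-task`, `--mem`, `--time`, `--gres`) are standard SLURM directives, but the function name, the default resource values, and the example command are hypothetical and are not taken from the G2 Cluster's own documentation. Partition names and account settings are cluster-specific and are deliberately left out.

```python
def make_sbatch_script(job_name, command, gpus=1, cpus=4, mem_gb=16, hours=2):
    """Return the text of a SLURM batch script requesting the given resources.

    All defaults are illustrative assumptions, not G2 Cluster recommendations.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --output={job_name}_%j.out",  # SLURM expands %j to the job ID
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={hours:02d}:00:00",    # wall-clock limit, HH:MM:SS
    ]
    if gpus > 0:
        # Generic resource request: ask the scheduler for this many GPUs.
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    lines.append("")       # blank line between the directives and the command
    lines.append(command)  # the actual work to run on the allocated node
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: the script name and command are placeholders.
    script = make_sbatch_script("train_model", "python train.py", gpus=1)
    with open("train_model.sh", "w") as f:
        f.write(script)
    print(script)
```

The generated file would then be submitted from a cluster login node with `sbatch train_model.sh`, after which the scheduler places the job on a node that can satisfy the request.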

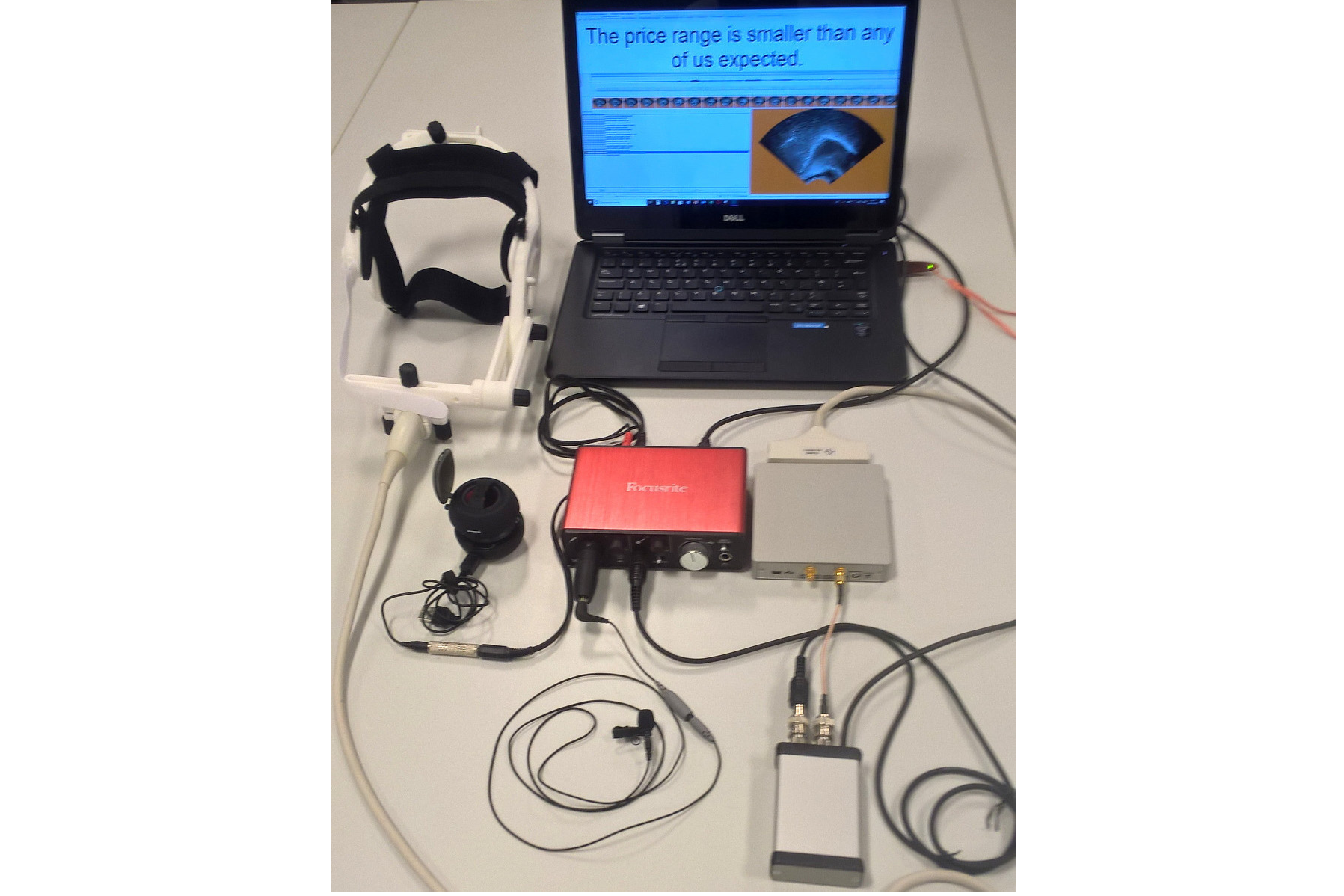

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

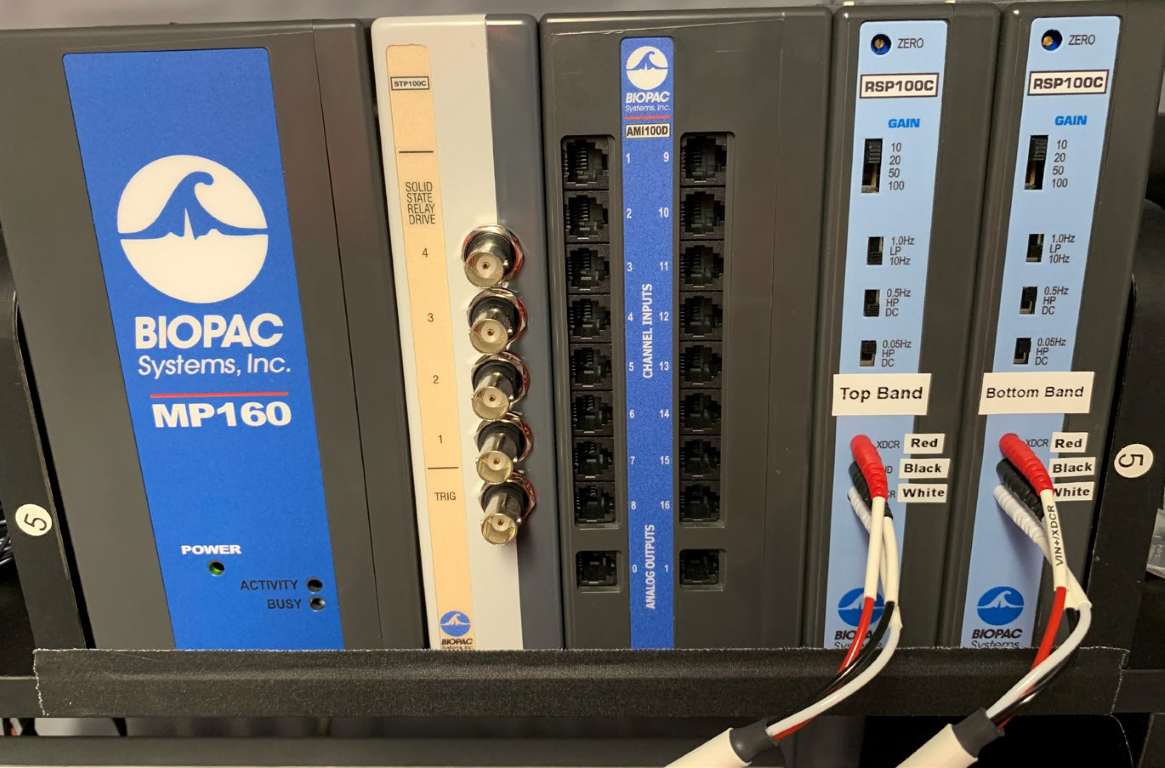

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page (available only to Cornell faculty and students) outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.