LING 1170: Introduction to Cognitive Science (Fall, Summer)

This course provides an introduction to the science of the mind. Everyone knows what it's like to think and perceive, but this subjective experience provides little insight into how minds emerge from physical entities like brains. To address this issue, cognitive science integrates work from at least five disciplines: Psychology, Neuroscience, Computer Science, Linguistics, and Philosophy. This course introduces students to the insights these disciplines offer into the workings of the mind by exploring visual perception, attention, memory, learning, problem solving, language, and consciousness.

LING 3344 (Spring): Superlinguistics: Comics, Signs and Other Sequential Images

Super-linguistics is a subfield of linguistics that applies techniques used for analyzing natural language to non-linguistic materials. This course uses linguistic tools from semantics, pragmatics and syntax to study sequential images found in comics, films, and children’s books. We will also study multimedia, gestures, and static images such as instruction signs, emoji, and paintings. Linguistic topics include anaphora, implicature, tense and aspect, attitudes and embedding, indirect discourse, and dynamic semantics. We introduce linguistic accounts of each of the topics and apply them to pictorial data.

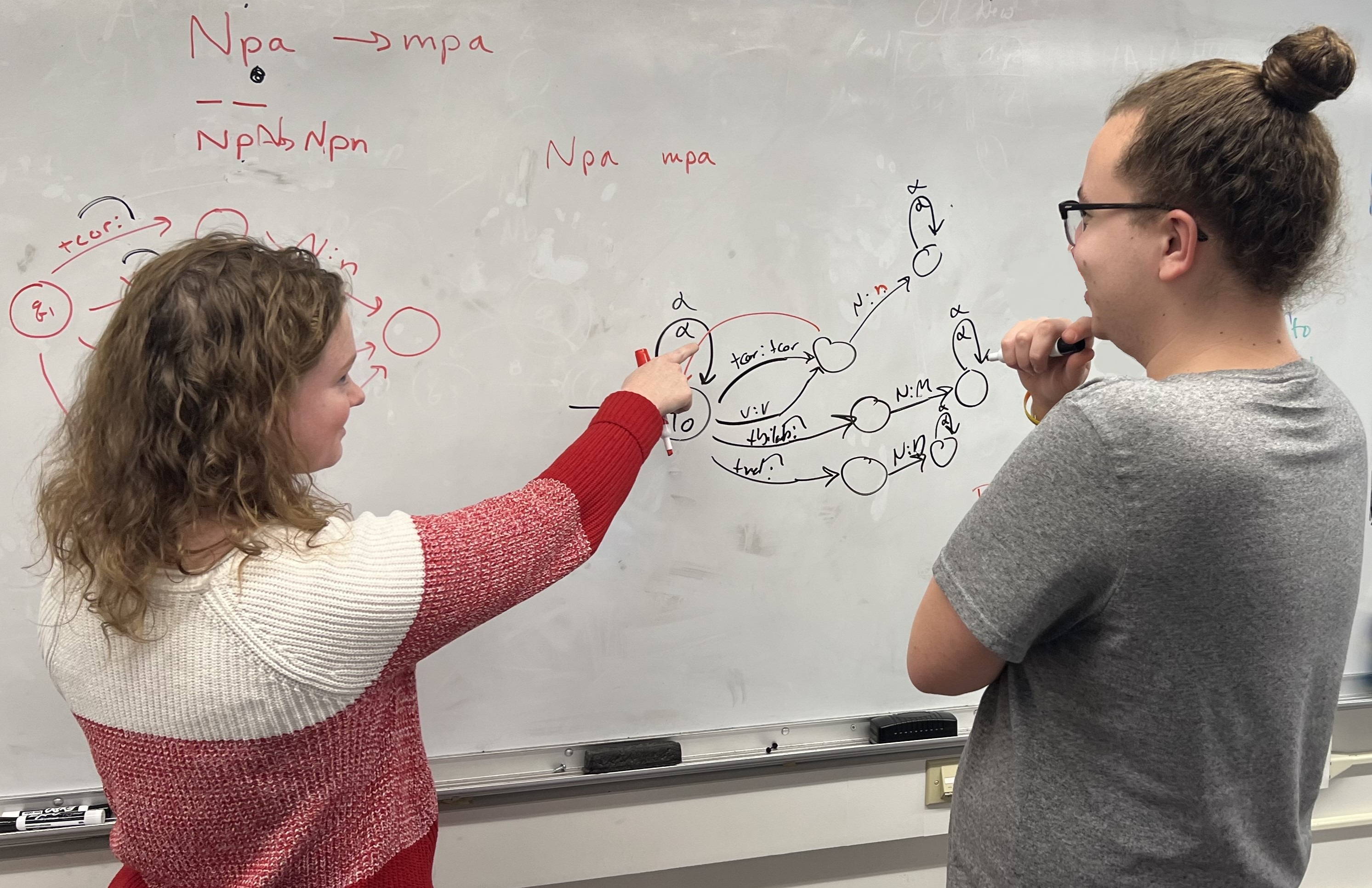

LING 4424/6424 (Spring): Computational Linguistics I

Computational models of natural languages. Topics are drawn from: tree syntax and context free grammar, finite state generative morpho-phonology, feature structure grammars, logical semantics, tabular parsing, Hidden Markov models, categorial and minimalist grammars, text corpora, information-theoretic sentence processing, discourse relations, and pronominal coreference.

LING 4434/6634 (Fall): Computational Linguistics II

An in-depth exploration of modern computational linguistic techniques. A continuation of LING 4424 - Computational Linguistics I. Whereas LING 4424 covers foundational techniques in symbolic computational modeling, this course will cover a wider range of applications as well as coverage of neural network methods. We will survey a range of neural network techniques that are widely used in computational linguistics and natural language processing as well as a number of techniques that can be used to probe the linguistic information and language processing strategies encoded in computational models. We will examine ways of mapping this linguistic information both to linguistic theory as well as to measures of human processing (e.g., neuroimaging data and human behavioral responses).

LING 4474/6674 (Spring): Natural Language Processing

This course constitutes an introduction to natural language processing (NLP), the goal of which is to enable computers to use human languages as input, output, or both. NLP is at the heart of many of today’s most exciting technological achievements, including machine translation, question answering and automatic conversational assistants. The course will introduce core problems and methodologies in NLP, including machine learning, problem design, and evaluation methods.

LING 4485/6485: Topics in Computational Linguistics

Current topics in computational linguistics.

LING 6693 - Computational Psycholinguistics Discussion (Fall, Spring)

This seminar provides a venue for feedback on research projects, invited speakers, and paper discussions within the area of computational psycholinguistics

LING 7710 (Fall) - Computational Seminar (Fall)

Addresses current theoretical and empirical issues in computational linguistics